
GSoC Ideas

MNE-Python is planning to participate in GSoC 2021 under the 🐍 Python Software Foundation (PSF) umbrella.

Note: If you are not currently pursuing research activities in MEG or EEG and do not use or do not plan to use MNE-Python for your own research, our GSoC might not be for you. Our projects all require domain-specific interest and are not simple coding jobs.

MNE-Python is a pure Python package for preprocessing and analysis of M/EEG data. For more information, see our homepage.

For modes of communication see our getting help page. It's a good idea to introduce yourself on our Discourse forum to get in touch with potential mentors before submitting an application.

- MNE-Python must be in the title of your application!

- Student application information for Python

We list some potential project ideas below, but we welcome other ideas that could fit within the scope of the project!

Medium (requires knowledge of M/EEG data)

Denis Engemann, Alex Gramfort, Eric Larson, Daniel McCloy

The aim of this project is to improve access to open EEG/MEG databases via the mne.datasets module; in other words, to improve our dataset fetchers. A PhysioNet fetcher already exists, but much more data is available. A consistent API for accessing multiple data sources would be great.

See https://github.com/mne-tools/mne-python/issues/2852 and https://github.com/mne-tools/mne-python/issues/3585 for some ideas, or:

- MMN dataset (http://www.fil.ion.ucl.ac.uk/spm/data/eeg_mmn/ ) used for tutorial/publications applying DCM for ERP analysis using SPM.

- Human Connectome Project Datasets (http://www.humanconnectome.org/data/ ). Over a 3-year span (2012-2015), the Human Connectome Project (HCP) scanned 1,200 healthy adult subjects. The available data includes MR structural scans, behavioral data and (on a subset of the data) resting state and/or task MEG data.

- Kymata Datasets (https://kymata-atlas.org/datasets). Current and archived EMEG measurement data, used to test hypotheses in the Kymata atlas. The participants are healthy human adults listening to the radio and/or watching films, and the data is comprised of (averaged) EEG and MEG sensor data and source current reconstructions.

- http://www.brainsignals.de/ A website that lists a number of MEG datasets available for download.

- BNCI Horizon (http://bnci-horizon-2020.eu/database/data-sets) has several BCI datasets
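
One way to picture a consistent fetcher API is a small registry that maps a dataset name to its description, with a single entry point shared by all datasets. The sketch below is hypothetical: `DatasetSpec`, `REGISTRY`, `data_path`, and the dataset keys are illustrative names, not part of `mne.datasets`. The download step is passed in as a function so the example runs without network access.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable


@dataclass(frozen=True)
class DatasetSpec:
    """Hypothetical description of one remote dataset."""

    name: str
    homepage: str  # landing page taken from the list above


# Hypothetical registry; a real one would also need archive URLs and hashes.
REGISTRY = {
    "spm_mmn": DatasetSpec("spm_mmn", "http://www.fil.ion.ucl.ac.uk/spm/data/eeg_mmn/"),
    "kymata": DatasetSpec("kymata", "https://kymata-atlas.org/datasets"),
}


def data_path(name: str, root: Path,
              download: Callable[[DatasetSpec, Path], None]) -> Path:
    """Return the local folder for ``name``, downloading it only if missing."""
    try:
        spec = REGISTRY[name]
    except KeyError:
        raise ValueError(
            f"Unknown dataset {name!r}; known: {sorted(REGISTRY)}"
        ) from None
    target = Path(root) / spec.name
    if not target.exists():
        target.mkdir(parents=True)
        download(spec, target)  # injected so callers (and tests) control I/O
    return target
```

With this shape, every dataset is requested the same way, for example `data_path("kymata", root, download)`, and adding a new source only means adding a registry entry.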

Medium (requires visualization and time-frequency analysis knowledge)

Mainak Jas, Britta Westner, Sarang Dalal, Denis Engemann, Alex Gramfort, Eric Larson

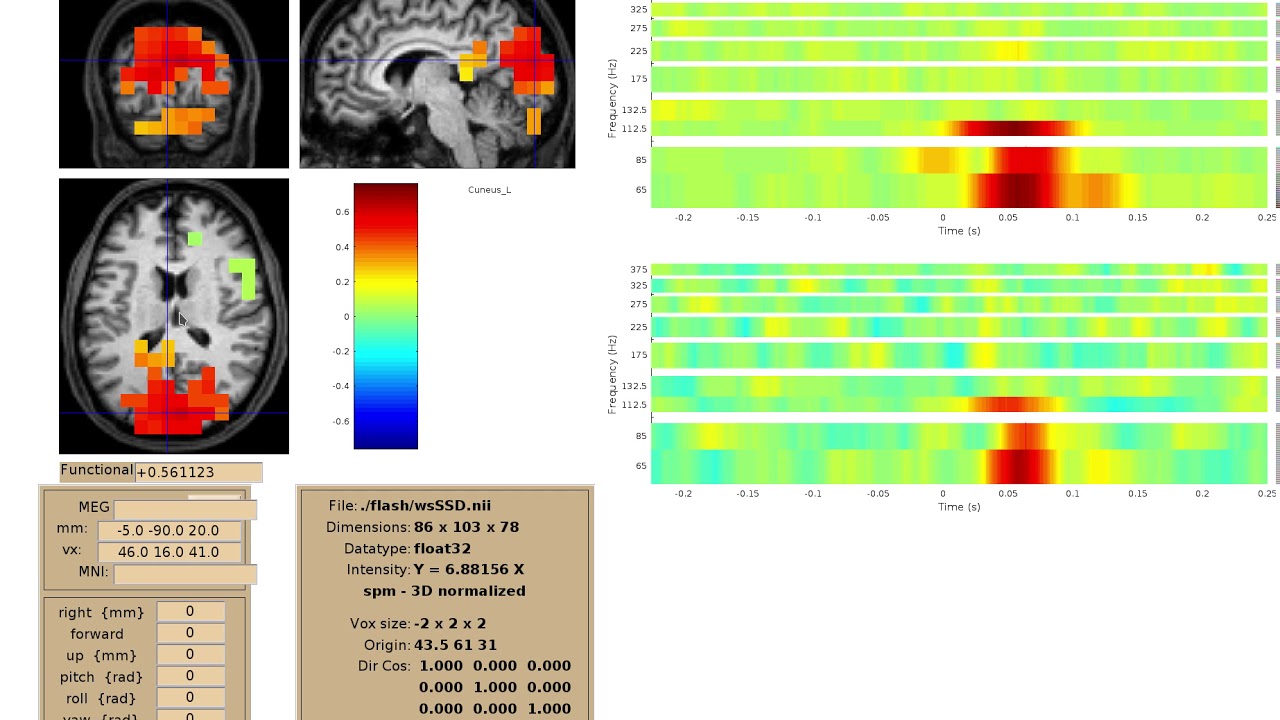

Implement viewer for interactive visualization of volumetric source-time-frequency (5-D) maps on MRI slices (orthogonal 2D viewer). NutmegTrip (written by Sarang Dalal) provides similar functionality in Matlab in conjunction with FieldTrip.

Example of NutmegTrip's source-time-frequency mode in action (click for link to YouTube):

- extend source time series (4-D) viewer created by Mainak Jas to a time-frequency (5-D) viewer

- Allow plotting surface data in volume using cortical ribbon (see closed glass brain PR: https://github.com/mne-tools/mne-python/pull/4496)

- capability to output source map animations directly as GIFs

- plotting on 3D rendered brains is not necessary at this stage, but it would be helpful if the code is written so that it can be extended to support this in the future

- extra credit: plot corresponding EEG/MEG topography at selected time point. (Not in video, but this can be done in NutmegTrip as well – very useful as a sanity check for source results.)
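
The core data access behind such a viewer can be sketched with NumPy indexing. This is a minimal illustration, not the planned implementation: it assumes the source-time-frequency data is stored as one array of shape `(x, y, z, n_freqs, n_times)` with RAS-oriented voxel axes, and the function names `orthogonal_slices` and `time_frequency_map` are hypothetical.

```python
import numpy as np


def orthogonal_slices(stf, voxel, freq_idx, time_idx):
    """Return the three orthogonal 2-D slices through ``voxel``.

    ``stf`` is assumed to have shape (x, y, z, n_freqs, n_times).
    """
    x, y, z = voxel
    volume = stf[..., freq_idx, time_idx]  # 3-D volume for one (freq, time) bin
    return {
        "sagittal": volume[x, :, :],  # fix x, show the y-z plane
        "coronal": volume[:, y, :],   # fix y, show the x-z plane
        "axial": volume[:, :, z],     # fix z, show the x-y plane
    }


def time_frequency_map(stf, voxel):
    """Return the (n_freqs, n_times) map at one voxel."""
    x, y, z = voxel
    return stf[x, y, z]
```

Clicking a voxel in any slice would update the other two slices and the time-frequency map, while clicking a point in the time-frequency map would change `freq_idx` and `time_idx` and redraw the slices.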

Hard (requires cloud computing and Python knowledge)

Currently, cloud computing with M/EEG data requires multiple manual steps, including remote environment setup, data transfer, monitoring of remote jobs, and retrieval of output data/results. These steps are usually not specific to the analysis of interest, and thus should be something that can be taken care of by MNE.

- Leverage `dask` and `joblib` or other libs to allow simple integration with MNE processing steps. Ideally this would be achieved in practice by:

  - One-time (or per-project) setup steps, setting up host keys, access tokens, etc.

  - In code, switch to cloud computing rather than local computing via a simple change of `n_jobs` parameter, and/or context manager like `with use_dask(...): ...`.

- Develop an example (as short as possible) that shows people how to run a minimal task remotely, including setting up access, cluster, nodes, etc.

- Adapt MNE-biomag-group-demo code to use cloud computing (optionally, based on config) rather than local resources.

Medium (requires refactoring of code and user interaction testing)

mne coreg is an excellent tool for coregistration.

However, it is limited by being tied to Mayavi, Traits, and TraitsUI. We should make the code more general so that we can switch to more sustainable backends (e.g., PyVista).

We can refactor and improve things in several (mostly) separable steps:

- Refactor code to use traitlets

- GUI elements to use PyQt5 (rather than TraitsUI/pyface)

- 3D plotting to use our abstracted 3D viz functions rather than Mayavi

- Implement a PyVista backend

Medium (requires working with C++ bindings)

OpenMEEG is a state-of-the-art solver for forward modeling in the field of brain imaging with MEG/EEG. It numerically solves partial differential equations (PDEs). It is written in C++ with Python bindings written in SWIG. The ambition of the project is to integrate OpenMEEG into MNE, giving MNE the ability to solve more forward problems (cortical mapping, intracranial recordings, etc.).

- Revamp Python bindings (remove useless functions, check memory management, etc.)

- Write example scripts for OpenMEEG that automatically generate web pages as for MNE

- Package OpenMEEG for Debian/Ubuntu

- Update the continuous integration system

Medium (requires experience with GUI toolkits such as Qt/PyQt)

MNE-Python currently offers a Matplotlib-based signal browser to visualize EEG/MEG time courses (raw.plot()). Although this browser gets the job done, it is rather slow (smooth scrolling is not possible) and uses custom (i.e. non-standard) GUI elements. The goal of this project is to implement an alternative signal browser that solves these issues, i.e. it should support smooth scrolling through the data and use standard GUI elements. The browser should also support working with annotations. If possible, Qt-based solutions should be preferred, and of course the result should be written in pure Python and work across platforms. Therefore, potential solutions include using PyQtGraph or writing custom Qt widgets.

In the long run, we want our Figure objects to have a flexible internal API that supports multiple drawing backends (matplotlib, qt/pyqtgraph, etc) as well as multiple GUI frontends (qt widgets, ipywidgets, etc). This project will implement one specific combination of GUI frontend and high-performance backend, and establish the abstraction necessary to add other frontends / backends in the future.

- Determine optimal toolkit/package to implement a high-performance signal browser

- Implement signal browser

- Write CI tests
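
The separation between browser state and drawing backend can be sketched as an abstract base class. This is a hypothetical design, not MNE-Python's actual figure API: `BrowserBase`, `RecordingBrowser`, and their methods are illustrative names. The base class owns the scrolling logic and each backend only implements `_redraw`.

```python
from abc import ABC, abstractmethod


class BrowserBase(ABC):
    """Hypothetical backend-agnostic state for a signal browser."""

    def __init__(self, n_times, sfreq, duration=10.0):
        self.n_times = n_times    # total number of samples
        self.sfreq = sfreq        # sampling frequency in Hz
        self.duration = duration  # visible window length in seconds
        self.t_start = 0.0        # window start in seconds

    @property
    def t_max(self):
        """Latest allowed window start, in seconds."""
        return max(self.n_times / self.sfreq - self.duration, 0.0)

    def scroll(self, delta):
        """Shift the window by ``delta`` seconds, clamped to the data range."""
        self.t_start = min(max(self.t_start + delta, 0.0), self.t_max)
        self._redraw(*self.visible_samples())

    def visible_samples(self):
        """Return (start, stop) sample indices of the current window."""
        start = int(round(self.t_start * self.sfreq))
        stop = min(start + int(round(self.duration * self.sfreq)), self.n_times)
        return start, stop

    @abstractmethod
    def _redraw(self, start, stop):
        """Draw samples ``start:stop``; each backend implements this."""


class RecordingBrowser(BrowserBase):
    """Stand-in backend that records redraw requests instead of drawing."""

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        self.calls = []

    def _redraw(self, start, stop):
        self.calls.append((start, stop))
```

A Matplotlib or PyQtGraph backend would subclass `BrowserBase` in the same way as `RecordingBrowser`, and a recording backend like this one makes the scrolling logic testable in CI without opening a window.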