
Fine-tune test generation

- Are your units solitary or sociable? Do you need mocking? What kind of mocking, if necessary?

UnitTestBot uses the Mockito framework to control dependencies in your code during testing. Choose what you need or rely on the defaults.

Note: currently, mocking is not available if you allocate all the test generation time for Fuzzing (see Test generation method).

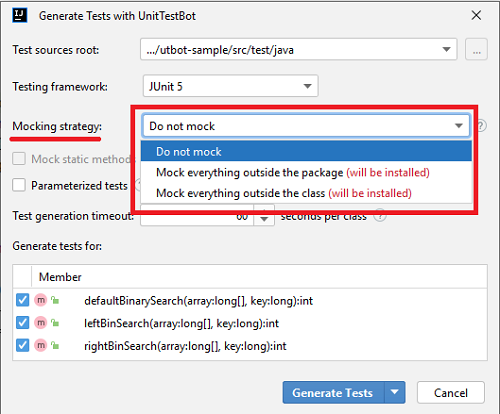

In the Generate Tests with UnitTestBot window choose your Mocking strategy:

- Do not mock — If you want the unit under test to actually interact with its environment, mock nothing.

- Mock everything outside the package — Mock everything around the current package except the system classes.

- Mock everything outside the class — Mock everything around the target class except the system classes.

Notes:

This setting is inactive when you enable Parameterized tests.

Remember that Classes to be forcedly mocked are mocked regardless of the mocking strategy you choose, even if you choose to mock nothing. This behavior is necessary for correct test generation. See Force mocking static methods for more information.
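The difference between mocking nothing and mocking the environment can be sketched in plain Java. This is only an illustration: `PriceCalculator`, `realTaxPercent` and `gross` are hypothetical names, and a hand-written lambda stands in for the Mockito mock that UnitTestBot would generate.

```java
import java.util.function.IntSupplier;

/** Hypothetical unit under test; not part of UnitTestBot or Mockito. */
final class PriceCalculator {
    private PriceCalculator() {}

    /** Stand-in for a real collaborator that lives outside the class under test. */
    static int realTaxPercent() {
        return 20;
    }

    /** The unit under test: adds tax obtained from a dependency. */
    static int gross(int net, IntSupplier taxPercent) {
        return net + net * taxPercent.getAsInt() / 100;
    }
}
```

A sociable check (Do not mock) calls `PriceCalculator.gross(200, PriceCalculator::realTaxPercent)` and depends on the real collaborator. A solitary check replaces the collaborator with a fixed value, for example `PriceCalculator.gross(200, () -> 10)`, so the result depends only on the unit itself.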

In the Generate Tests with UnitTestBot window you can choose to Mock static methods.

This option became available with Mockito 3.4.0. You can mock static methods only if you choose to mock either the package or the class environment. If you mock nothing, you cannot mock static methods either, except those that are forcedly mocked.

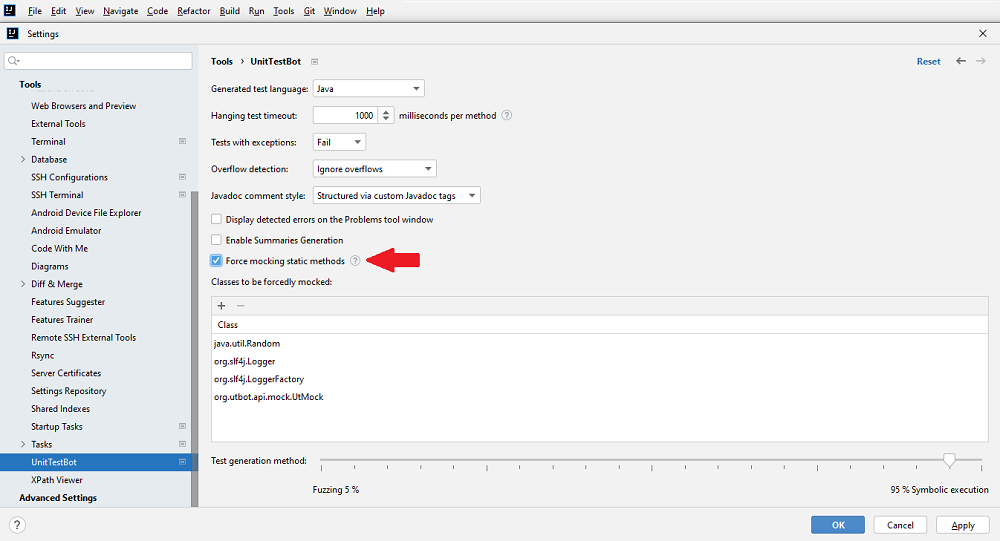

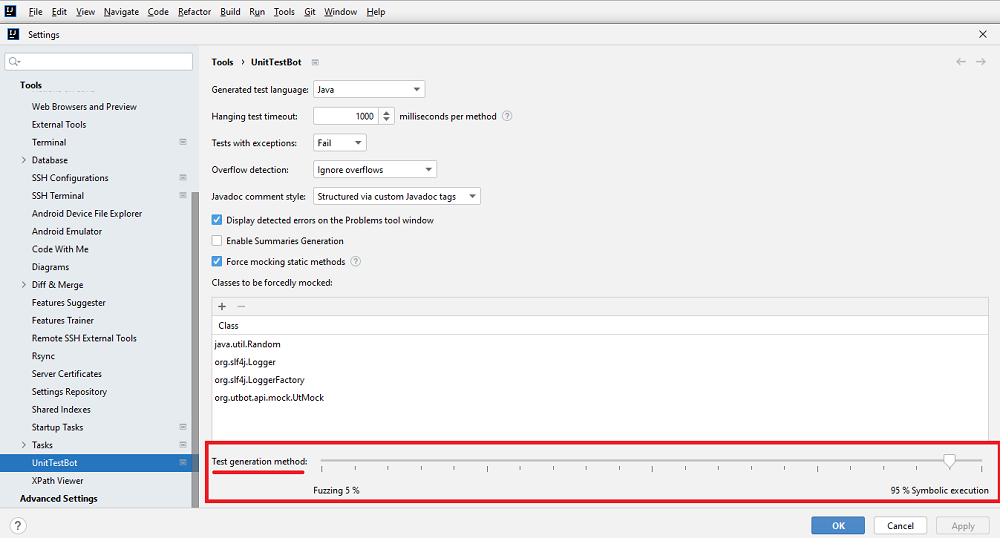

Go to File > Settings > Tools > UnitTestBot and choose to Force mocking static methods.

When enabled, it overrides all the other mocking settings.

It mocks the methods inside the Classes to be forcedly mocked even if Mockito is not installed.

Keep this setting enabled unless you are a contributor and want to experiment with UnitTestBot code.

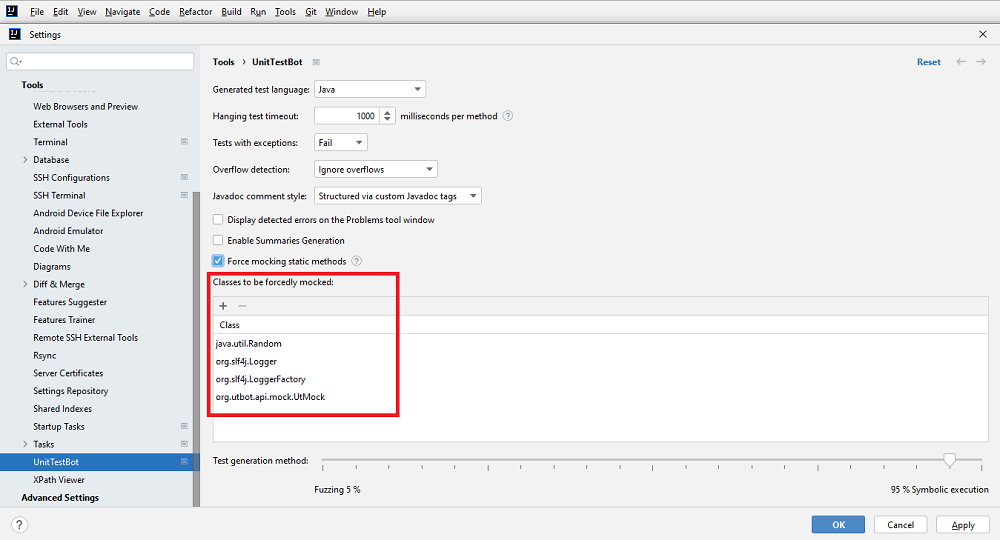

If you choose to Force mocking static methods, you'll see four classes that are always present among Classes to be forcedly mocked.

They must be mocked for correct test generation.

You can add to this list. What should be forcedly mocked?

Even if you choose to mock nothing or mock the package environment, please make sure to forcedly mock random number generators, I/O operations and loggers to get valid test results.
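To see why random number generators belong in this list, consider the minimal sketch below. `DiceGame` and its methods are hypothetical; the point is only that a result depending on a real generator cannot be asserted reliably, while a controlled source makes the outcome repeatable.

```java
import java.util.Random;
import java.util.function.IntSupplier;

/** Hypothetical example; not part of UnitTestBot. */
final class DiceGame {
    private DiceGame() {}

    /** Depends on a real generator: the result may differ from run to run. */
    static boolean winsWithRealRandom() {
        return new Random().nextInt(6) + 1 >= 4;
    }

    /** Same rule with the roll supplied from outside, so a test can fix it. */
    static boolean wins(IntSupplier roll) {
        return roll.getAsInt() >= 4;
    }
}
```

A test that asserts on `winsWithRealRandom()` would pass or fail unpredictably. With the generator replaced by a fixed value, `DiceGame.wins(() -> 6)` is always true and `DiceGame.wins(() -> 1)` is always false. Forced mocking achieves a similar effect without changing the source code.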

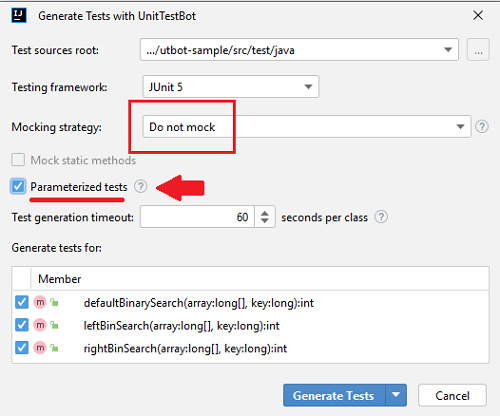

- Do you need to execute a single test method multiple times with different parameters?

You can enable Parameterized tests in the Generate Tests with UnitTestBot window.

In UnitTestBot, parameterized tests are available only when JUnit 5 or TestNG is chosen as the testing framework. UnitTestBot does not support parameterization for JUnit 4.

UnitTestBot does not allow parameterization when mocking is enabled. Make sure Mocking strategy is set to Do not mock; otherwise the parameterization option will be disabled.
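The idea behind a parameterized test is one test body executed over several rows of arguments. The sketch below shows it in plain Java to stay self-contained; `ParameterizedSketch`, `abs` and `runAll` are hypothetical names. In JUnit 5 the same shape is normally expressed with `@ParameterizedTest`, and in TestNG with `@DataProvider`; the exact code UnitTestBot generates may differ.

```java
/** Hypothetical example; a plain-Java stand-in for a parameterized test. */
final class ParameterizedSketch {
    private ParameterizedSketch() {}

    /** Method under test. */
    static int abs(int x) {
        return x < 0 ? -x : x;
    }

    /** Runs one check over several (input, expected) rows and counts failures. */
    static int runAll() {
        int[][] rows = { {5, 5}, {-5, 5}, {0, 0} };
        int failures = 0;
        for (int[] row : rows) {
            if (abs(row[0]) != row[1]) {
                failures++;
            }
        }
        return failures;
    }
}
```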

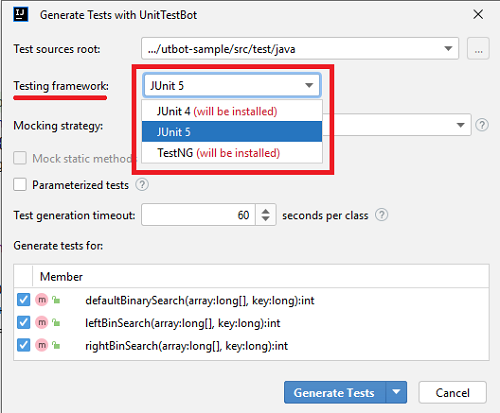

- Would you like to collect and run tests using JUnit 4, JUnit 5 or TestNG?

In the Generate Tests with UnitTestBot window choose the necessary Testing framework: JUnit 4, JUnit 5 or TestNG.

If any of them is not installed on your computer, UnitTestBot will install it for you.

Note: if you choose JUnit 4, you won't be able to activate Parameterized tests.

- Do you need to generate test methods in Kotlin for the source code written in Java or vice versa?

By default, UnitTestBot detects your source code language and generates test methods accordingly.

In File > Settings > Tools > UnitTestBot you can choose Generated test language to generate test methods in Java or Kotlin regardless of your source code language.

Generating tests in Kotlin is an experimental feature for now.

- Choose a folder to store your test code.

For your first test generation with UnitTestBot choose the Test sources root manually in the Generate Tests with UnitTestBot window. UnitTestBot will remember your choice for future generations.

If you re-generate the test for a given class or method, it will erase the previously generated test for this unit. To prevent this, rename or move the tests you want to keep.

If the Test sources root directory already contains handwritten tests or tests created with the IntelliJ IDEA Create Test feature, make sure to rename or move these tests. UnitTestBot will overwrite them if they have the same names as the generated ones.

If your project is built with Maven or Gradle, you can choose only the pre-defined Test sources root options from the drop-down list, because these build systems impose a strict project directory layout. If necessary, define a custom Test sources root via Gradle or Maven by modifying the build file. If you use the IntelliJ IDEA native build system, you can mark any directory as Test Sources Root.

- Choose methods, classes or packages you want to cover with tests.

You can choose the scope for test generation either in the Project tool window or in the Editor.

- If you need to generate tests for a package or a set of classes, you can choose them all at once in the Project tool window.

- If you want to specify methods to be covered with tests inside the given class, you can choose the class in the Project tool window or, alternatively, place the caret at the required class or method right in the Editor.

Then in the Generate Tests with UnitTestBot window tick the classes or methods you'd like to cover with tests.

There are ways to configure the inner process of test generation. They are mostly intended for contributors, but they can sometimes help in everyday use. Try them if you are not satisfied with test coverage. Make sure you set everything back to the defaults after finishing your experiments! Otherwise, the next standard test generation may give poor results.

Before configuring these settings, get to know the shortest UnitTestBot overview ever:

UnitTestBot has a dynamic symbolic execution engine at its core, complemented with a smart fuzzing technique. The fuzzer tries to "guess" values that allow UnitTestBot to enter as many branches as possible. The dynamic symbolic engine, in turn, tries to "deduce" such values. UnitTestBot starts its work with a bit of fuzzing, quickly generating inputs to cover some branches. Then the dynamic symbolic execution engine systematically explores the program's execution paths. It executes the program on concrete and symbolic values simultaneously. These two ways of execution guide each other, trying to reach all the possible branches.
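The contrast between "guessing" and "deducing" can be illustrated with a toy example. This is a sketch under strong simplifications: `BranchSketch` is hypothetical, the "fuzzer" here is plain uniform random sampling (the real one is smarter), and the "solver" is a single hand-written equation.

```java
import java.util.Random;

/** Hypothetical example; not UnitTestBot code. */
final class BranchSketch {
    private BranchSketch() {}

    /** Method under test with a branch that only one int value reaches. */
    static String classify(int x) {
        if (x * 3 + 7 == 3_000_007) {
            return "rare";
        }
        return "common";
    }

    /** Naive guessing: counts how many random ints reach the rare branch. */
    static int randomHits(long seed, int attempts) {
        Random random = new Random(seed);
        int hits = 0;
        for (int i = 0; i < attempts; i++) {
            if (classify(random.nextInt()).equals("rare")) {
                hits++;
            }
        }
        return hits;
    }

    /** Deduction: solve the branch condition 3x + 7 = 3_000_007 for x. */
    static int solvedInput() {
        return (3_000_007 - 7) / 3;
    }
}
```

Random guessing covers the "common" branch immediately but is very unlikely to reach the "rare" one, while solving the branch condition yields the required input directly: `BranchSketch.classify(BranchSketch.solvedInput())` returns `"rare"`.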

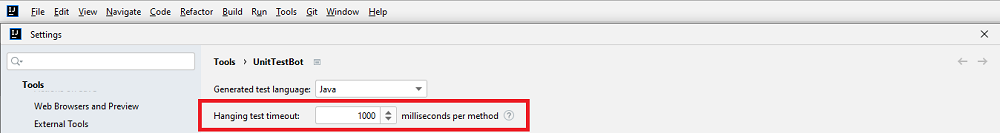

The symbolic engine generates parameters for concrete execution, that is, for executing source code with concrete values. During concrete execution, if the engine enters an infinite loop or meets some other code condition that takes too long to execute, the test being generated "hangs". This also means that the resulting test will hang when it runs and invokes the time-consuming method.

Hanging test timeout is the time limit for the concrete execution process. Set this timeout to define which tests count as "hanging". Increase it to test a time-consuming method, or decrease it if execution speed is critical for you.

Go to File > Settings > Tools > UnitTestBot and set the timeout in milliseconds, in 50-millisecond increments.
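The notion of a "hanging" execution can be sketched with standard Java concurrency utilities. This is not how UnitTestBot implements the timeout; `HangingTimeoutSketch`, `hangs` and `spinForever` are hypothetical names used only to show what "did not finish within the limit" means.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical example; not UnitTestBot code. */
final class HangingTimeoutSketch {
    private HangingTimeoutSketch() {}

    /** Returns true if the task does not finish within timeoutMillis. */
    static boolean hangs(Callable<?> task, long timeoutMillis) {
        // A daemon worker thread never keeps the JVM alive on its own.
        ExecutorService executor = Executors.newSingleThreadExecutor(runnable -> {
            Thread thread = new Thread(runnable);
            thread.setDaemon(true);
            return thread;
        });
        Future<?> future = executor.submit(task);
        try {
            future.get(timeoutMillis, TimeUnit.MILLISECONDS);
            return false; // finished in time
        } catch (TimeoutException e) {
            future.cancel(true); // interrupt the worker
            return true;
        } catch (ExecutionException e) {
            return false; // the task threw an exception, but it did not hang
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            executor.shutdownNow();
        }
    }

    /** Loops until interrupted, simulating code that never returns by itself. */
    static int spinForever() {
        while (!Thread.currentThread().isInterrupted()) {
            Thread.onSpinWait();
        }
        return -1;
    }
}
```

With a 50 ms limit, `HangingTimeoutSketch.hangs(HangingTimeoutSketch::spinForever, 50)` reports a hang, while a task that returns immediately does not. Raising the limit gives slow but finite code a chance to complete.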

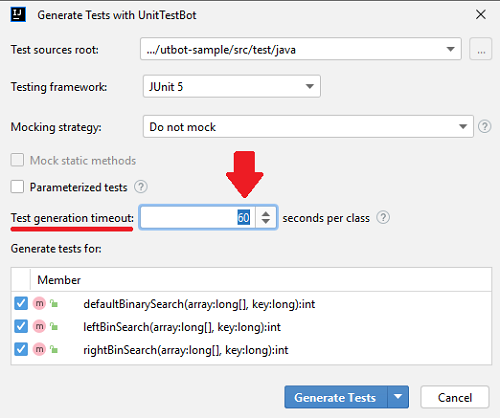

In the Generate Tests with UnitTestBot window you can set the timeout for both the fuzzing process and the symbolic engine to generate tests for a given class.

UnitTestBot starts its work with Fuzzing, switching to Symbolic execution later.

Go to File > Settings > Tools > UnitTestBot and choose the proportion of time allocated for each of these two methods within Test generation timeout per class.

The closer the slider is to a method (Fuzzing or Symbolic execution), the more time you allocate to it.

The default proportion is Fuzzing (5%) — Symbolic execution (95%).
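As a worked example of the proportion, the sketch below splits a per-class timeout between the two methods. `TimeSplit` is a hypothetical helper, and the 60-second timeout used in the usage note is an assumed value chosen for easy arithmetic, not a documented default.

```java
/** Hypothetical helper; not UnitTestBot code. */
final class TimeSplit {
    private TimeSplit() {}

    /** Time given to fuzzing, as a percentage of the per-class timeout. */
    static long fuzzingMillis(long timeoutPerClassMillis, int fuzzingPercent) {
        return timeoutPerClassMillis * fuzzingPercent / 100;
    }

    /** The remainder of the per-class timeout goes to symbolic execution. */
    static long symbolicMillis(long timeoutPerClassMillis, int fuzzingPercent) {
        return timeoutPerClassMillis - fuzzingMillis(timeoutPerClassMillis, fuzzingPercent);
    }
}
```

With an assumed timeout of 60,000 ms and the default 5% share, fuzzing gets 3,000 ms and symbolic execution gets the remaining 57,000 ms.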

You can adjust how the generated tests behave and look.

Go to File > Settings > Tools > UnitTestBot and choose the behavior for the tests with exceptions:

- Fail — tests that produce Runtime exceptions should fail.

- Pass — tests that produce Runtime exceptions should pass (by inserting throwable assertion).
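The two behaviors can be sketched in plain Java. `ExceptionBehaviorSketch` and its methods are hypothetical; in a JUnit 5 test the "Pass" variant would typically be written with `assertThrows`, but the exact assertion UnitTestBot inserts is not shown here.

```java
/** Hypothetical example; not UnitTestBot output. */
final class ExceptionBehaviorSketch {
    private ExceptionBehaviorSketch() {}

    /** Method under test: throws ArithmeticException when b is zero. */
    static int divide(int a, int b) {
        return a / b;
    }

    /** "Pass" style: the exception is expected, so the check succeeds when it is thrown. */
    static boolean passStyle() {
        try {
            divide(1, 0);
            return false; // no exception: the expectation is not met
        } catch (ArithmeticException expected) {
            return true;
        }
    }

    /** "Fail" style: the call is made directly, so the exception propagates and fails the test. */
    static void failStyle() {
        divide(1, 0);
    }
}
```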

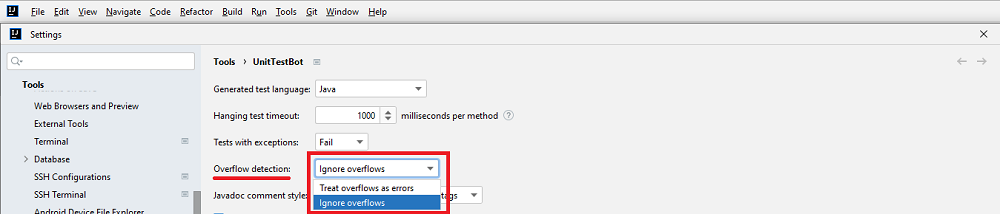

Go to File > Settings > Tools > UnitTestBot and choose the approach to overflows: whether UnitTestBot should Ignore overflows or Treat overflows as errors.
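To make the choice concrete, here is what an integer overflow looks like in Java. `OverflowSketch` is a hypothetical class; `Math.addExact` is the standard library method that throws instead of wrapping around.

```java
/** Hypothetical example; not UnitTestBot code. */
final class OverflowSketch {
    private OverflowSketch() {}

    /** Plain int addition silently wraps around on overflow. */
    static int wrappingAdd(int a, int b) {
        return a + b;
    }

    /** Math.addExact throws ArithmeticException on overflow. */
    static int checkedAdd(int a, int b) {
        return Math.addExact(a, b);
    }
}
```

`OverflowSketch.wrappingAdd(Integer.MAX_VALUE, 1)` returns `Integer.MIN_VALUE` without any error. If you Ignore overflows, such a result is accepted as regular behavior; if you Treat overflows as errors, inputs like these are reported as problems in the code under test.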

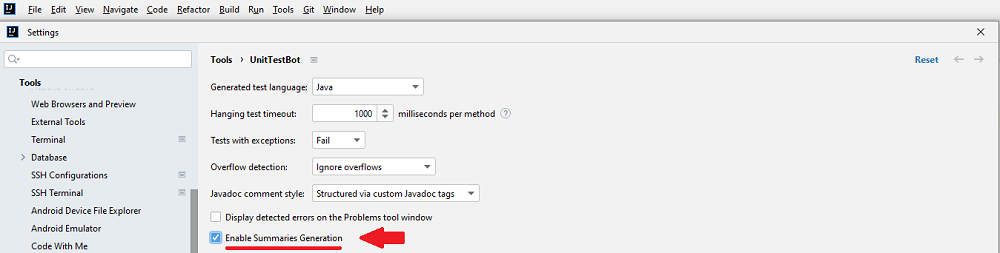

Go to File > Settings > Tools > UnitTestBot and choose to generate summaries if you want UnitTestBot to provide you with detailed test descriptions — see how to Read test descriptions for more information. You can also choose the style for them.

Note: currently, the feature is available only for generating tests in Java (not in Kotlin).

See Explore test suites to find more information on why you might need to change this configuration.

Each test description consists of:

- Cluster comments (regions)

- Javadoc comments

- Testing framework annotation (@DisplayName)

- Test method name

You can choose to display all or just a few of these test description elements. Nobody is expected to change these settings manually, but in some rare cases you might need it. Go to {userHome}/.utbot/settings.properties and uncomment the line to enable or disable the necessary setting:

- enableClusterCommentsGeneration

- enableJavaDocGeneration

- enableDisplayNameGeneration

- enableTestNamesGeneration

Make sure to restart your IntelliJ IDEA and continue to generate tests as usual.
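The sketch below shows how commented and uncommented lines in a `.properties` file are interpreted, using the standard `java.util.Properties` parser. The setting names come from the list above; the `true`/`false` values and the choice of which line is uncommented are assumptions made for illustration, and `SettingsSketch` is a hypothetical class.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Hypothetical example; the values below are assumed, not documented defaults. */
final class SettingsSketch {
    private SettingsSketch() {}

    /** Lines starting with '#' are comments and are ignored by the parser. */
    static final String SAMPLE = String.join("\n",
            "# enableClusterCommentsGeneration=true",
            "enableJavaDocGeneration=false",
            "# enableDisplayNameGeneration=true",
            "# enableTestNamesGeneration=true");

    /** Parses text in .properties format into key-value pairs. */
    static Properties parse(String text) {
        Properties properties = new Properties();
        try {
            properties.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return properties;
    }
}
```

In this sample only `enableJavaDocGeneration` is uncommented, so it is the only key the parser sees. The commented lines have no effect until the leading `#` is removed.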

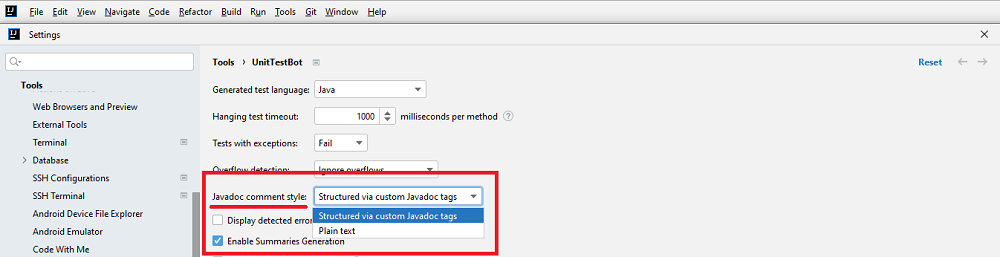

Go to File > Settings > Tools > UnitTestBot and choose the style for test descriptions:

- Structured via custom Javadoc tags — UnitTestBot uses custom Javadoc tags to concisely describe test execution path.

- Plain text — UnitTestBot briefly describes test execution path in plain text.

For more information and illustrations see Read test descriptions.

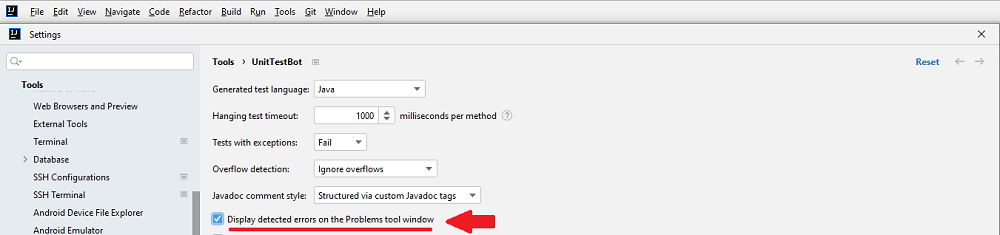

Go to File > Settings > Tools > UnitTestBot and choose to Display detected errors on the Problems tool window if you want IntelliJ IDEA to automatically show you the errors registered in the SARIF report during test generation.
