
- 🔒 Self-Hosting in your VPC or on-prem: We have full self-hosting guides for AWS, GCP, Kubernetes generally, and Docker Compose available on our documentation page.

- 🧠 Semantic Dense Vector Search: Integrates with OpenAI or Jina embedding models and Qdrant to provide semantic vector search.

- 🔍 Typo-Tolerant Full-Text/Neural Search: Every uploaded chunk is vectorized with naver/efficient-splade-VI-BT-large-query for typo-tolerant, high-quality neural sparse-vector search.

- 🖊️ Sub-Sentence Highlighting: Highlight the matching words or sentences within a chunk and bold them on search to enhance UX for your users. Shout out to the simsearch crate!

- 🌟 Recommendations: Find similar chunks (or files if using grouping) with the recommendation API. Very helpful if you have a platform where users favorite, bookmark, or upvote content.

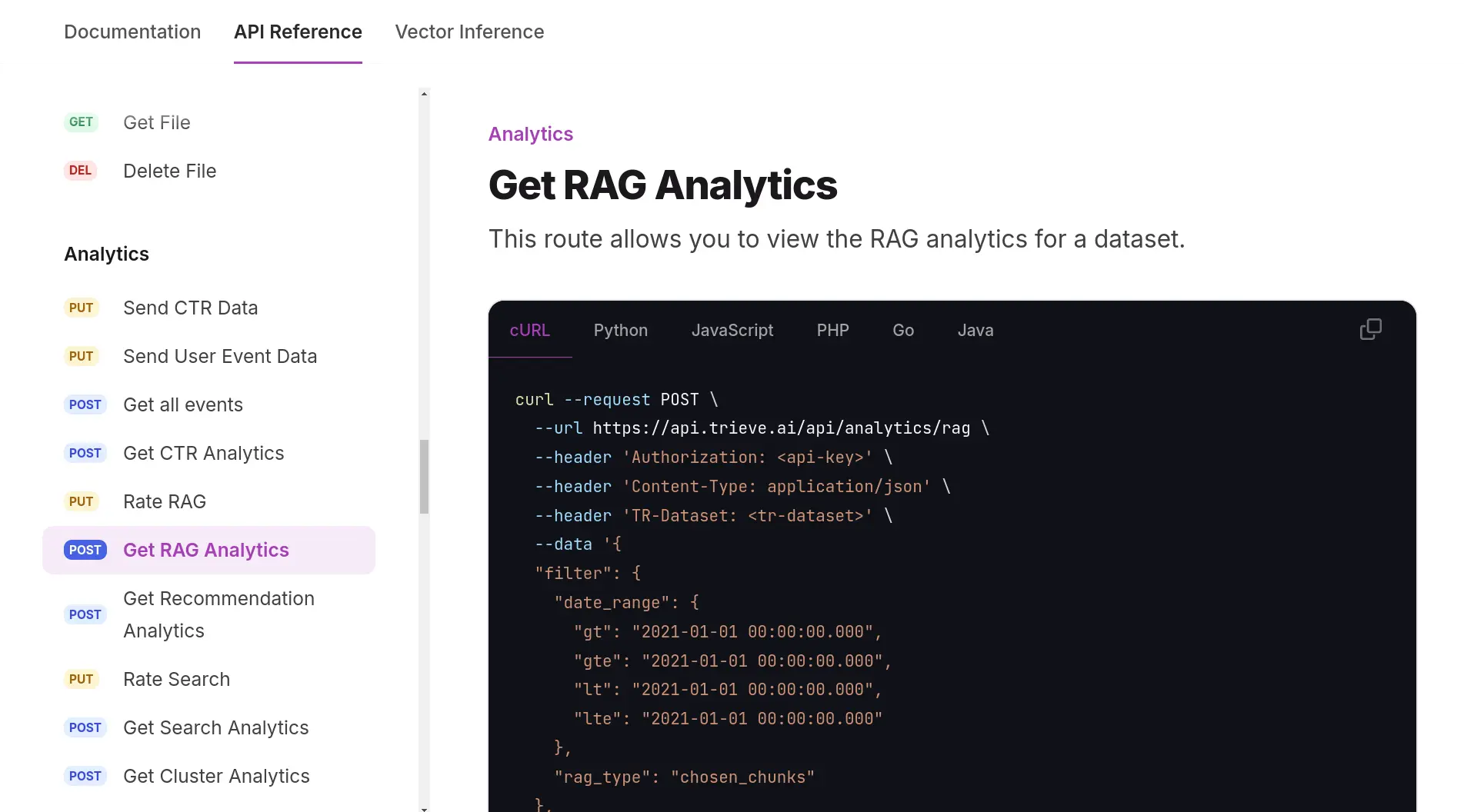

- 🤖 Convenient RAG API Routes: We integrate with OpenRouter to provide you with access to any LLM you would like for RAG. Try our routes for fully-managed RAG with topic-based memory management or select your own context RAG.

- 💼 Bring Your Own Models: If you'd like, you can bring your own text-embedding, SPLADE, cross-encoder re-ranking, and/or large-language model (LLM) and plug it into our infrastructure.

- 🔄 Hybrid Search with cross-encoder re-ranking: For the best results, use hybrid search with BAAI/bge-reranker-large re-rank optimization.

- 📆 Recency Biasing: Easily bias search results toward the most recent content to prevent staleness.

- 🛠️ Tunable Merchandizing: Adjust relevance using signals like clicks, add-to-carts, or citations

- 🕳️ Filtering: Date-range, substring match, tag, numeric, and other filter types are supported.

- 👥 Grouping: Mark multiple chunks as being part of the same file and search at the file level, so that the same top-level result never appears twice.

Are we missing a feature that your use case needs? Call us at 628-222-4090, open a GitHub issue, or join the Matrix community and tell us! We are a small company that is still very hands-on and eager to build what you need; professional services are available.

On Debian/Ubuntu:

sudo apt install curl \
  gcc \
  g++ \
  make \
  pkg-config \
  python3 \
  python3-pip \
  libpq-dev \
  libssl-dev \
  openssl

On Arch Linux:

sudo pacman -S base-devel postgresql-libs

You can install NVM using its install script.

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.39.5/install.sh | bash

Restart your terminal so that the updated bash profile loads NVM. Then install the Node.js LTS release and Yarn.

nvm install --lts

npm install -g yarn

mkdir server/tmp

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

cargo install cargo-watch

cp .env.analytics ./frontends/analytics/.env

cp .env.chat ./frontends/chat/.env

cp .env.search ./frontends/search/.env

cp .env.server ./server/.env

cp .env.dashboard ./frontends/dashboard/.env

The server needs an OpenAI API key; see OpenAI's guide for acquiring one.

- Open the ./server/.env file.
- Replace the value for LLM_API_KEY with your own OpenAI API key.
- Replace the value for OPENAI_API_KEY with your own OpenAI API key.
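If you prefer to script the key replacement rather than edit the file by hand, something like the following could work. This is only a sketch: `set_env_var` is a hypothetical helper (not part of the repository), and it assumes the file uses plain `KEY=value` lines and that the key contains no backslashes.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): set KEY=value in a
# dotenv-style file. Replaces the first-column assignment if the key
# already exists, otherwise appends a new line at the end.
# Assumption: values contain no backslashes (awk -v would interpret them).
set_env_var() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  awk -v k="$key" -v v="$value" '
    BEGIN { found = 0 }
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" && mv "$tmp" "$file"
}

# Example usage (placeholder key, replace with your own):
#   set_env_var ./server/.env LLM_API_KEY "sk-your-key"
#   set_env_var ./server/.env OPENAI_API_KEY "sk-your-key"
```

Writing to a temporary file and moving it into place avoids leaving a half-written .env if the command is interrupted.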

cat .env.chat .env.search .env.server .env.docker-compose > .env

./convenience.sh -l

Each of the services below runs in its own terminal. We recommend managing them through tmuxp (see the tmuxp guide) or separate terminal tabs.

cd clients/ts-sdk

yarn build

cd frontends

yarn

yarn dev

cd server

cargo watch -x run

cd server

cargo run --bin ingestion-worker

cd server

cargo run --bin file-worker

cd server

cargo run --bin delete-worker

cd search

yarn

yarn dev

- Check that you can see ReDoc with the OpenAPI reference at localhost:8090/redoc

- Make an account and create a dataset with test data at localhost:5173

- Search that dataset with test data at localhost:5174
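Before opening a browser, a quick reachability check of the three local URLs listed above can save some time. The sketch below is an assumption-laden convenience, not part of the repository: `check_url` and `report` are hypothetical names, and it only confirms that something answers HTTP on each port, not that the pages render correctly.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of this repo) for a quick smoke test.

# Succeeds if the URL answers with a non-error HTTP status.
# -f: fail on HTTP errors, -s: silent, -o /dev/null: discard the body,
# --max-time 5: give up instead of hanging if nothing is listening.
check_url() {
  curl -fs -o /dev/null --max-time 5 "$1"
}

# Prints one "ok" or "FAIL" line per URL given.
report() {
  local url
  for url in "$@"; do
    if check_url "$url"; then
      echo "ok   $url"
    else
      echo "FAIL $url"
    fi
  done
}

# Example usage, with the services from the steps above running:
#   report http://localhost:8090/redoc http://localhost:5173 http://localhost:5174
```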

Reach out to us on Discord for assistance. We are available and more than happy to help.

To debug Diesel, you can get the exact SQL that a query generates:

diesel::debug_query(&query).to_string();

Install Stripe CLI.

1. stripe login
2. stripe listen --forward-to localhost:8090/api/stripe/webhook
3. Set the STRIPE_WEBHOOK_SECRET in the server/.env to the resulting webhook signing secret
4. stripe products create --name trieve --default-price-data.unit-amount 1200 --default-price-data.currency usd
5. stripe plans create --amount=1200 --currency=usd --interval=month --product={id from the response of the products create command}
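The product id has to be copied from the output of the products create command into the plans create command. A rough way to script that hand-off is sketched below. `extract_id` is a hypothetical helper, and it assumes the Stripe CLI prints pretty-printed JSON in which the first `"id"` line is the product's own id; verify that against your CLI's actual output before relying on it.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of this repo): read JSON on stdin and print
# the value of the first line that looks like   "id": "..."
# Assumption: one key per line, as in pretty-printed JSON output.
extract_id() {
  sed -n 's/^[[:space:]]*"id":[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Example usage, reusing the two Stripe commands from the steps above:
#   product_id="$(stripe products create --name trieve \
#     --default-price-data.unit-amount 1200 \
#     --default-price-data.currency usd | extract_id)"
#   stripe plans create --amount=1200 --currency=usd --interval=month \
#     --product="$product_id"
```

If jq is installed, piping the output through `jq -r '.id'` is a sturdier alternative to the sed expression.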